Redesigning the Voice Experience of Amazon's Alexa through Co-creative AI Design

Challenge: How can we co-create alternatives to the female-only voice used in the Amazon Echo and the Echo Dot?

Outcome: Through community-driven research and design approaches, we developed an alternative voice design for Amazon's Alexa. Additionally, we suggested an approach to engage in transparency in voice design.

OUR HYPOTHESIS

Co-design of privacy settings and voice outputs can lead to a more inclusive conversational experience.

WE PROPOSED

Engaging in co-design could initiate a conversation about what is acceptable regarding privacy, transparency, and the gendering of products.

RESEARCH METHODS

We started by connecting with communities directly affected by the gendering of voice technologies, through community conversations and one-on-one interviews. We created a voice design toolkit that asked about the gendering of voices, about creating new sounds in voice design, and about ways to audibly signal when emotions analysis is being used during a voice interaction. We asked questions in person, through our toolkit, and remotely via phone and email.

Example of voice design toolkit question / response

Question: If you could add a material quality of the sound of your voice assistant, what would it sound like?

Response: “Papery. And with a rumble-tumbled consistency of the New York subway.”

Co-Creating Thoughtful Voice Design

In the smart/virtual assistant space, humanizing and gendering a voice assistant can work to reinforce existing sexist norms, and also model behavior that has negative and permanent social ramifications for young children. Some major concerns include:

Offering a female-only voice attached to a product.

Utilizing emotions analysis without user consent.

Parents issuing commands to a voice assistant model unhealthy communication behavior for their children, which has significant ramifications for child development. Connecting that behavior to a female voice sends a very dangerous message to young children: that they can speak to embodied, physical, women-identified humans in the same way.

Needing the ability to turn off the microphone at any time, along with an indicator that shows when the mic is on.

Voice Design Research

DESIGN OPPORTUNITIES

Based on our research, we have identified further opportunities in this design space:

In response to humanization and gendering in voice design, we created new ideas around the voice assistant space. In our redesign, the social, audio and physical factors resulted in digital and physical modifications to existing conversational products and experiences. We believe that inclusive design processes result in more interesting AI products, with less unwanted bias.

WHAT WE MADE

PROTOTYPES

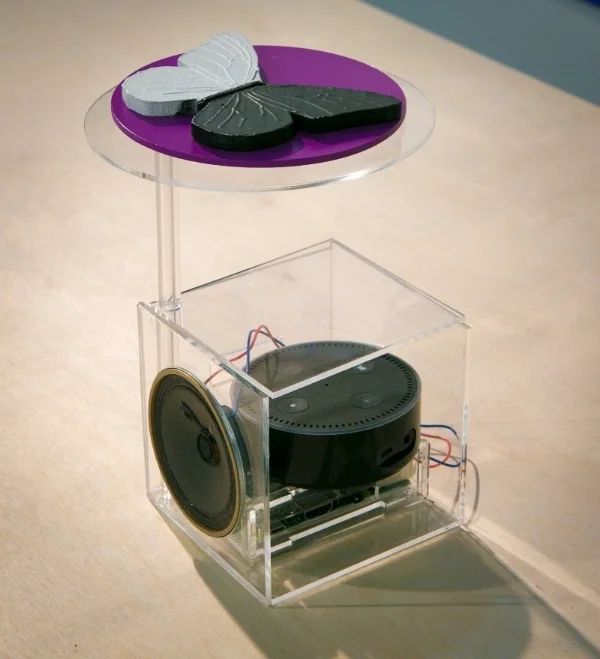

We built prototypes of suggested voice designs for public testing. Each prototype was housed in a dome that fits over a newly designed container for the Amazon Echo Dot. Each design uses a different voice, which is reflected in the material product design.

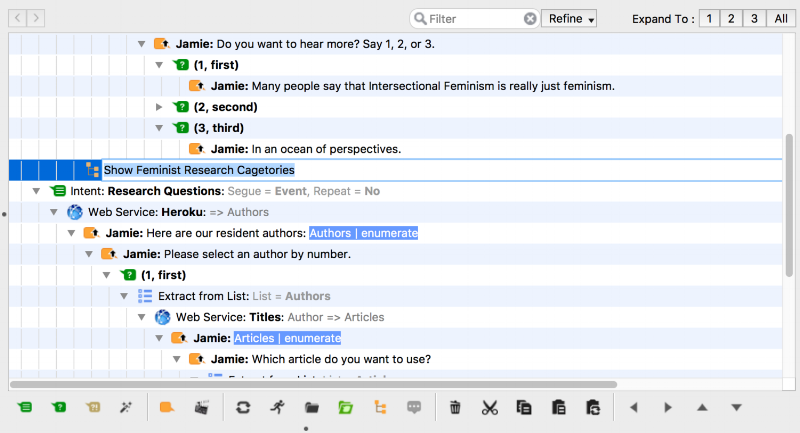

Prototyping the Redesign of Alexa's voice with a customized pullstring.ai template.

MATERIALS

We also created a prototype to test multiple voice interactions. Using pullstring.ai, we built a bot whose conversation tree draws on texts from three different feminist researchers. The bot alters its perspective and content by author, materially changing its voice.

We used poieto, an AI design tool, to engage in AI design for new conversation design products.
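The idea of a conversation tree whose voice shifts with the author can be sketched as follows. This is a minimal illustration in Python, not the pullstring.ai template used in the project: the `Node` class, the `AUTHOR_VOICES` table, the author keys, and all passage texts are hypothetical placeholders. The output uses the standard SSML `prosody` element, on the assumption that the speech engine accepts SSML.

```python
from dataclasses import dataclass, field
from xml.sax.saxutils import escape


@dataclass
class Node:
    """One step in the conversation tree (all content is placeholder text)."""
    author: str
    text: str
    replies: dict = field(default_factory=dict)  # user reply -> next Node


# Hypothetical voice settings per author, using standard SSML prosody values.
AUTHOR_VOICES = {
    "author_a": {"pitch": "low", "rate": "slow"},
    "author_b": {"pitch": "medium", "rate": "medium"},
    "author_c": {"pitch": "high", "rate": "fast"},
}


def render(node: Node) -> str:
    """Wrap the node's text in SSML so the voice follows the node's author."""
    voice = AUTHOR_VOICES[node.author]
    return (
        f'<speak><prosody pitch="{voice["pitch"]}" rate="{voice["rate"]}">'
        f"{escape(node.text)}</prosody></speak>"
    )


def step(node: Node, user_reply: str) -> Node:
    """Follow the branch matching the reply; stay on the same node otherwise."""
    return node.replies.get(user_reply.strip().lower(), node)


# A tiny three-author tree built from placeholder passages.
leaf_b = Node("author_b", "Placeholder passage from the second author.")
leaf_c = Node("author_c", "Placeholder passage from the third author.")
root = Node(
    "author_a",
    "Placeholder passage from the first author.",
    {"more": leaf_b, "other": leaf_c},
)
```

Calling `render(step(root, "more"))` would produce the second author's passage with that author's pitch and rate, so a change of perspective is also heard as a change of voice.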

Audio of Pitch Change Prototype

PROCESS

System/Industry: Smart Assistants in the US Market.

Code: We engaged in prototyping new voices using Alexa-AVS and Pullstring.ai.

Material: We used a Raspberry Pi with plug-ins and physically designed objects to change Alexa's voice on the Echo Dot in the home environment.

The user experience changes because users gain access to more audio identities. We incorporated the idea of designing with "earcons": audio indicators that signal the use of a particular algorithm or database. This research resulted in a new software feature that works in conjunction with an existing product (Echo Dot).
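One way an earcon could be attached to a spoken response is sketched below. This is an assumption-laden illustration rather than the feature built in the project: the `EARCONS` table, the feature names, and the example.com audio URLs are hypothetical, and the sketch assumes a speech engine that accepts the standard SSML `audio` element.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical mapping from an algorithm or data source to its earcon file.
EARCONS = {
    "emotions_analysis": "https://example.com/earcons/emotions.mp3",
    "location": "https://example.com/earcons/location.mp3",
}


def with_earcons(text: str, active_features: list) -> str:
    """Prefix the spoken text with one earcon per active feature.

    Features without a registered earcon are skipped silently.
    """
    cues = "".join(
        f"<audio src={quoteattr(EARCONS[name])}/>"
        for name in active_features
        if name in EARCONS
    )
    return f"<speak>{cues}{escape(text)}</speak>"
```

With this shape, a response generated while emotions analysis is running would start with that algorithm's sound, while a response with no active features would be plain speech.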

DESIGN RESEARCH INSIGHTS

There was much conversation and interest in gendered and non-gendered voice design. The majority of participants wanted the ability to change the AI's gender (through a plugin, slider or voice command).

Participants wanted to design beyond the human voice, and incorporate different design materials inspired by sounds outside of the human voice.

Participants wanted to be fully aware if emotions analysis was being used.

Participants wanted earcons to identify the use of specific information such as the text of an author, location, or the utilization of a specific algorithm (such as emotions analysis).
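The slider that participants asked for could, in one simple form, control the pitch of the voice. The sketch below is only an illustration under stated assumptions: pitch is just one of many cues that shape how a voice is perceived, the function names are hypothetical, and the bounds `MIN_PITCH_PERCENT` and `MAX_PITCH_PERCENT` are assumed limits that should be checked against the target speech engine.

```python
from xml.sax.saxutils import escape

# Assumed limits for relative pitch change; verify against the speech engine.
MIN_PITCH_PERCENT = -33.0
MAX_PITCH_PERCENT = 50.0


def slider_to_pitch(position: float) -> str:
    """Map a slider position in [0.0, 1.0] to a relative SSML pitch value.

    0.5 leaves the pitch unchanged; values outside the range are clamped.
    """
    position = min(1.0, max(0.0, position))
    if position >= 0.5:
        percent = (position - 0.5) / 0.5 * MAX_PITCH_PERCENT
    else:
        percent = (0.5 - position) / 0.5 * MIN_PITCH_PERCENT
    return f"{percent:+.0f}%"


def speak_with_slider(text: str, position: float) -> str:
    """Wrap text in SSML with the pitch chosen by the slider."""
    return (
        f'<speak><prosody pitch="{slider_to_pitch(position)}">'
        f"{escape(text)}</prosody></speak>"
    )
```

The same mapping could sit behind a plugin setting or a voice command, since each of them only needs to produce a position between 0.0 and 1.0.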

OUTCOME

Through community-driven research and design approaches, we developed an alternative voice design for Amazon's Alexa. Additionally, we suggested an approach to engage in transparency in voice design.

LEARNINGS

Our collaborative research resulted in the following:

Participants became more aware of some of the design biases built into intelligent products.

Participants wanted the option of designing their own voices.

Participants did not want to be limited to designing gendered human voices. They wanted the ability to be creative and play with their voice design.

We used Wizard of Oz prototyping for emotions analysis, because the wake word and the Beyond Verbal API were incompatible: accurate sentiment analysis requires a longer audio sample than the wake-word interaction provides. Participants indicated they would like the ability to modify the output of the voice when private information is collected.

Our co-design research of privacy settings and outputs prompted thinking around inclusive and playful conversation design experiences.

Industry: Smart Assistants

Expertise: AI Design, Interaction Design, Participatory Design

Thank you

Special thanks to developer Debra Do for her development on this project.

PROJECT DATE: August 2016 - CURRENT